Changelog

We are constantly working on new features and improvements. See what's new here:

Claude Opus 4.6

Anthropic's Claude Opus 4.6 is now available in Langdock! 🚀

Claude Opus 4.6 is available in Langdock starting today. Anthropic's new flagship model is a direct upgrade from its predecessor, Opus 4.5, and is significantly more capable at coding, reasoning, and long-context tasks.

The model decides on its own when a question calls for deeper thinking and when a quick answer is enough. Simple questions should therefore be answered noticeably faster, without sacrificing answer quality on harder tasks.

More details on the launch and the model are available in Anthropic's launch announcement.

Like Claude Opus 4.5, the model is available in two versions: with and without reasoning.

The model is hosted in the EU and is available in all workspaces immediately.

So that you can get started right away, we have automatically enabled Opus 4.6 in every workspace where Opus 4.5 is enabled. Admins can manage model access in the workspace settings.

New Labels and Overview for Agents

We have redesigned the agents page and added labels for agents so that you can discover, organize, and find the right agents for your needs more easily!

As teams grow and create more and more agents, keeping track of them quickly becomes difficult. The new features help you organize and filter agents and quickly find the most relevant ones again, whether you are looking for something you used yesterday or want to discover what your team has built.

What's new?

1. Improved navigation

The agents page is now divided into clear sections so that you can find what you are looking for faster. It is structured as follows:

- Featured agents are showcased by admins at the top of the page

- Recently used agents are the ones you interacted with most recently

- Recently shared agents are the ones that were shared with you a short time ago

- Popular agents in the workspace are agents your team uses especially often

- All agents shows your complete agent library

2. Advanced filter options

We have also added filters so that you can narrow down your agents more easily. You can filter by:

- The creator of the agent

- The labels assigned to the agent

- The integrations used in the agent

- Who the agent was shared with

- Whether the agent was verified by admins

- The agent's creation date

As before, you can find agents using the search bar.

3. Agent labels

You can now assign up to 3 labels to each of your agents so that others can discover them more easily. Choose from default labels such as Marketing, Sales, HR, and Engineering, or from custom labels created by your workspace admins. Labels make it easy to categorize and filter agents by team, use case, or function. You can add labels at the bottom of the agent configuration.

4. Featured agents

Workspace admins can now feature up to 4 agents, which are shown to everyone in the workspace at the top of the agents page. This makes the most frequently used agents easy to find across the whole company. To feature an agent, admins hover over it, click the three dots, and select "Feature".

We are excited to see how you use labels and filters to organize your agents better! 🙌

Company Knowledge

We are happy to introduce our Company Knowledge feature (Unternehmenswissen). Starting now, you can search all of your connected data platforms directly from the Langdock chat.

Your company's knowledge is often spread across different platforms such as SharePoint, Google Drive, Outlook, or Teams. Langdock brings it all together. With Company Knowledge, you search all of your platforms at once and receive a single, consolidated answer.

Why we built Company Knowledge

Since the launch of our integrations, many teams have connected their data platforms to Langdock to build more capable agents. Along the way, we noticed that many users look for information that is scattered across several systems, which used to make searching very cumbersome. Now you can query all connected tools at once and get one answer with direct links to the original sources.

How to use Company Knowledge

Search from the chat

Click the "Company Knowledge" button in the input bar. Select the platforms, ask your question, and receive an answer drawn from all of your connected integrations.

Follow the search in real time

The sidebar shows you what Langdock is doing at the moment: which searches are running, which documents are being read, and how the answer is taking shape. You can collapse the panel if you only want to see the result.

Sources

Every answer contains links to the original sources, so you can verify the information directly in SharePoint, Google Drive, or the respective tool.

Content-based search

Company Knowledge does not only search file names. It opens documents, analyzes their content, and decides whether further searches are needed. This lets Langdock find relevant information that a simple keyword search would miss.

Permissions are preserved

Company Knowledge also respects your existing access rights. You only see results from documents that you have access to on the respective platform.

Supported integrations

Workspace admins can define in the settings which integrations are available for Company Knowledge.

You can search the following platforms:

- Google Drive

- OneDrive

- SharePoint

- Confluence

- Gmail

- Outlook Email

- Slack

- Microsoft Teams

- Google Calendar

- Outlook Calendar

- Linear

Note: With this release, we have tidied up the chat input. You can find the buttons for Deep Research, Canvas, and web search by clicking the "+" to the left of the input field.

This is the first version of the feature. We are already working on further integrations and look forward to your feedback! 🙌

Updated File Permissions

We are changing how permissions work for folders and files that agents use from tools such as Google Drive or SharePoint.

Previous permission behavior

Until now, every user with access to an agent could also use all of that agent's synced files. They could work with them even if they had no corresponding permissions in Google Drive or SharePoint.

As a result, folder access permissions had to be managed in two places: in the source system (such as Google Drive or SharePoint) and in Langdock.

New permission behavior

From now on, users can only access files and folders for which they are also authorized in the source system. When someone uses an agent without having permission for certain files, a clear message shows which files are unavailable. The agent then works only with the files the user is authorized to access.
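
The new behavior can be pictured as a simple split of an agent's attached files by what the current user may read in the source system. The following is only a minimal sketch of that idea, not Langdock's implementation: the `AttachedFile` class, the `split_by_source_access` function, and the `readable_ids` set are all hypothetical names introduced for illustration, and the way permissions are actually looked up in Google Drive or SharePoint is not covered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttachedFile:
    """A file synced into an agent from a source system (hypothetical model)."""
    file_id: str
    name: str


def split_by_source_access(attached, readable_ids):
    """Split an agent's attached files into (available, unavailable).

    `readable_ids` stands in for the set of file IDs the current user may
    read in the source system; how it is obtained is an assumption here.
    """
    available = [f for f in attached if f.file_id in readable_ids]
    unavailable = [f for f in attached if f.file_id not in readable_ids]
    return available, unavailable


files = [
    AttachedFile("f1", "pricing.xlsx"),
    AttachedFile("f2", "roadmap.docx"),
    AttachedFile("f3", "salaries.pdf"),
]
available, unavailable = split_by_source_access(files, readable_ids={"f1", "f2"})

# The agent would work with `available`; `unavailable` feeds the user-facing message.
print("Agent can use:", [f.name for f in available])
print("Not available to this user:", [f.name for f in unavailable])
```

In this sketch the same agent yields different file sets for different users, which is the practical consequence of managing permissions only in the source system.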

Why this matters

We are aware that this change can affect how some of your agents work. At the same time, this update aligns folder and file synchronization with how agent actions work: users need their own connection and can only access what they are authorized for in the source system. This makes permissions consistent across all Langdock integrations and keeps your company data secure.

What you should do

We have already emailed users who created agents with attached data from an external source to inform them about this change. Here are our recommendations for a smooth transition to the new permission behavior:

- Review agents to which you have attached folders or files from third-party tools.

- Check who has access to these agents.

- Make sure that all users have the right permissions in the source system.

We look forward to your feedback! 🙌

- Improved chat sorting: Search results from "Search chats" in the left navigation and from the command bar are now sorted by recency for more relevant results.

- More meaningful chat titles: Chat and workflow titles are now more descriptive, shorter, and generated in the user's configured language.

- Improved file search: Native file picker fields now show parent folders and full hierarchical paths, making files easier to identify.

- Deep Research disabled after use: After Deep Research has been used, the button is now switched off so that the next message does not immediately trigger another research run. Background: users prefer normal prompts to continue working with the research results and process the findings.

- Hide group members: Group admins and workspace admins can hide a group's member list from its members for compliance reasons.

- Agent user feedback: Agent creators now receive notifications when other users leave feedback on their agents.

- Import/export of agents and integrations: Users can now import and export agents and integrations as JSON files.

- Longer action output: The maximum action output has been increased from 150,000 to 200,000 characters.

- Save @-actions in the prompt library: Users can now store @-actions in their saved prompts. This makes it possible to define in advance which agent, knowledge folder, or integration is used.

- Send button for small screens: On small screens, the Enter key now inserts a new line and no longer sends the prompt, so prompts are sent with the send button. On large screens, Enter still sends the prompt to the model.

- BYOK model replacement: BYOK admins can now select a replacement model when removing custom models. All agents, chats, and workflows are automatically updated to the new model. This is especially helpful when models are retired in favor of newer versions.

- New MCP version supported: We have updated our MCP support to version 2025-11-25 in order to support additional MCP servers. Existing MCP servers remain supported.

- Automatic MCP server detection: When you create a new MCP integration, the server name and icon are now fetched automatically from the URL, with no manual entry required.

Sharing Projects

We are very happy to announce that you can now share projects. This improvement has been frequently requested since we released projects in July! 🚀

Until now, projects were a good way to organize your own chats and to work on smaller projects in a structured way by adding instructions and files. With the new sharing feature, we are turning projects into a powerful tool for collaboration. You can now share entire project folders, including all the chats, files, and instructions they contain, with your team or with whole groups.

Why share projects?

Projects have always been helpful for organizing your own work. But we noticed that many of you use them to structure work that is actually done together. Collaboration often happens in shared context, not just in individual messages. When you work on projects such as a marketing campaign, a research topic, or a coding sprint, you often need to share the whole picture, including files, instructions, and conversations. Instead of sharing individual chats with each other, you can now give your team access to the full context of a project and let them build on your work.

Core features

Flexible sharing

You can now share a project with specific users or groups within your workspace. Everyone with access can view the chats, files, and custom instructions within that project. This is perfect for onboarding new team members to a topic or keeping everyone on the same page.

Better organization

To help you manage shared and private projects, we have updated the project sidebar and added filter options to the project view. You can now easily filter chats by "By you" or "By others" to keep your workspace tidy.

Granular permissions

You stay in control of your data. When you share a project, you can control access by setting the roles of your collaborators. Editors can share the project with others, change the project title, update instructions, and manage attached files. They can also add new conversations to the project. Users have read-only access. They can see the project configuration, read all chats, and view the project contents. New or old, all conversations added to a shared project are visible to all project members.

Important: Regardless of your role, you are the only one who can change, rename, or delete your own chats, even if the project is deleted.

Project sharing is available starting today. To share a project, open it and click the "Share" button in the top right.

We are excited to hear your feedback and how you collaborate! 🙌

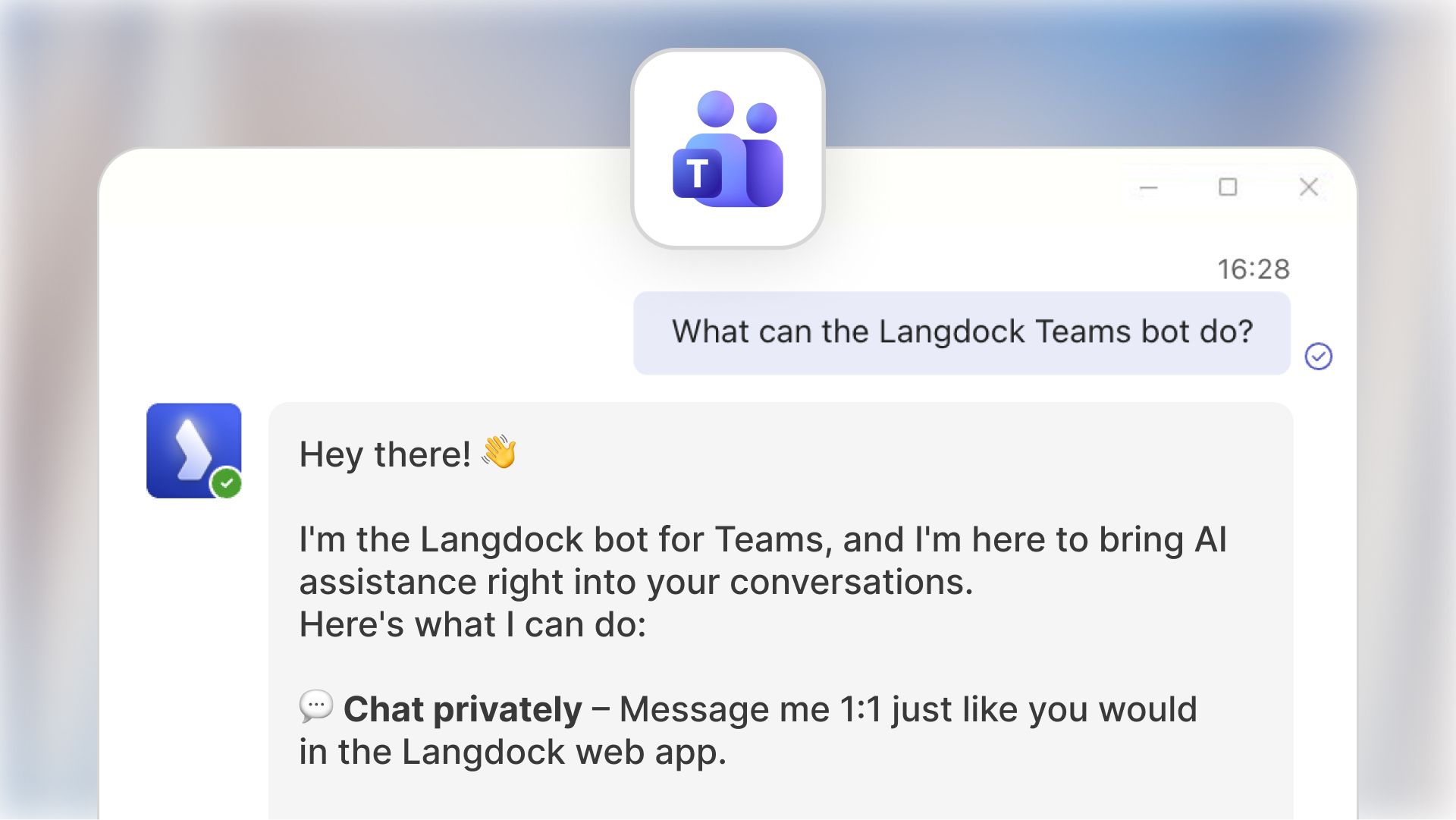

Microsoft Teams App

We are bringing Langdock even closer to where many of you work every day by adding Langdock to Microsoft Teams 🤝

With the new Microsoft Teams app, your team can now use Langdock models and agents directly in Teams, either in a private chat or together in channels. This is especially helpful for small ad hoc situations in day-to-day work. Example use cases include quickly translating text or researching smaller topics without having to open Langdock separately.

How to use the Teams app

Private conversations with the Langdock app

Chat 1:1 with Langdock just like in the web app: full context, full functionality, no friction. Click "New chat" and type "Langdock" to open a chat with the app.

Collaborating in channels with @Langdock

Alternatively, you can bring Langdock into any channel (channels for a specific topic, recognizable by a main message and a thread of replies to that message). The app reads the whole thread only once it is mentioned with @, so that it can support you with the current topic.

Working with the app in chats

You can also use the app in chats that you have with other users. Here, too, you can mention the app with @ and then add your prompt. In this case, the model can only refer to that single message, not to the entire chat history.

Features of the Teams app

Choosing your default model

When you bring the app into your chat, you can either use the default model (e.g., GPT-5.1) or select a specific agent. The default model is a good choice for quick requests such as explanations or smaller questions.

Agent selection within Teams

Start a conversation with any Langdock agent, from translators to HR FAQ agents, directly in Teams.

File & image support

Upload documents or images in Teams messages. Langdock can read and analyze them just like in the web app.

Why we built the Teams app

Many organizations use Microsoft Teams to collaborate and communicate. Many users have asked for a way to use Langdock in Teams, since they already use the app several times a day. Teams thus becomes a natural extension of Langdock that helps your team collaborate faster and embed agents into everyday workflows, from joint problem solving to specialized channel setups.

Getting started

Your workspace admin can install Langdock via the Teams Admin Center. After installation, you can simply search for Langdock in the Teams apps.

For details, take a look at the setup guide and the usage guide.

We are really looking forward to your feedback! 🙌

Assistants Are Now Agents

We are renaming assistants to agents in Langdock! This change reflects how the product has evolved and how the market, and many of you, talk about AI.

What is changing?

Only the name. Your existing assistants keep all of their features, configurations, and sharing settings. Everything works exactly as before.

Why agents?

When we launched assistants in fall 2023, the market was still working on basic capabilities, such as how AI works with a small number of documents. Since then, capabilities have grown considerably. What began as relatively simple chatbots can today handle large data sets, process tabular data, connect to your tools, and much more. AI uses agentic behavior to choose the right approach and the right tools for each task, all within the configuration you define.

The term "agent" has become the industry standard for what we used to call an assistant. Many companies, such as Microsoft and Google Enterprise AI, use this terminology. More importantly, over the past months we have heard from many of you that your users are used to the term "agents" or even ask whether Langdock offers agents.

Why now?

This change follows the launch of workflows a few weeks ago. Agents and workflows are complementary products: agents are your interactive AI support for specific tasks, while workflows let you chain several components together (including multiple agents) to automate larger processes in the background.

What does this mean for you?

You don't have to do anything! The switch has happened automatically, and we have updated all of our documentation to the new terminology.

The Assistant API remains unaffected by these changes for now. We will send a separate notification next year about the changes to it.

The Langdock platform now consists of the following products: Chat, Agents, Workflows, Integrations, and API. We do not take renaming a core product lightly. We thought about it carefully and talked to many of you. In the end, it made sense to us given how the product and the market have developed.

We welcome your feedback on how we can keep improving agents for you! 🙌

- Support for Gemini 3 Pro: We have added Gemini 3 Pro. The model required some additional configuration options to work. Currently, the model is only available as a global deployment and not yet as an EU-hosted model. BYOK customers can add the model in the model configuration. For customers who use models provided by Langdock, we have added the model as a global deployment, and administrators can enable it in the workspace settings.

- Disabling workflow nodes: Workflow editors can now disable workflow nodes by clicking the three dots in the top right.

- Creating workflow triggers: Previously, you needed both permission to create custom integrations and workflow access in order to build triggers for integrations. Now all users who are allowed to create integrations can also build triggers. Go to your custom integrations and add a trigger the same way you would add an action.

- Updated MCP version: We have updated our MCP support to version 2025-11-25 in order to support additional MCP servers. Existing MCP servers remain supported.

- Reordering image models: Administrators of BYOK workspaces can now change the order of image models. This works the same way as reordering models.

- Agent forms: Forms in agents were previously limited to 15 fields. We have raised the limit to 25 fields.

- API for creating and updating agents: We have launched new API endpoints for creating and editing agents. You can find more in the Assistant API documentation.

Workflow Improvements

Since the launch of workflows a few weeks ago, our team has shipped numerous improvements and performance gains to make them even easier and more powerful to use. In response to the feedback many of you sent us, we focused on making it as easy as possible to create, test, and refine workflows. Thank you again for all your input. You can find our launch video below.

Here is an overview of the most important updates:

New node: Loop

Make your workflows smarter and faster by automating repetitive actions. Loop nodes let you run a selection of nodes multiple times before moving on to the next node. This helps with recurring tasks and with iterating over lists.
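
Conceptually, a loop node behaves like a `for` loop wrapped around a group of steps. The snippet below is a plain-Python sketch of that idea only; `run_loop` and the two example steps are hypothetical and say nothing about how the workflow builder is implemented.

```python
def run_loop(items, nodes):
    """Run every node in `nodes`, in order, once per item and collect the results."""
    results = []
    for item in items:
        value = item
        for node in nodes:
            # Each node receives the previous node's output for this item.
            value = node(value)
        results.append(value)
    return results


def strip_whitespace(text):
    """Example step: remove surrounding whitespace."""
    return text.strip()


def to_title(text):
    """Example step: convert the text to title case."""
    return text.title()


subjects = ["  invoice overdue ", "welcome aboard"]
looped = run_loop(subjects, [strip_whitespace, to_title])
print(looped)
```

Only after every item has passed through all looped steps does execution continue with whatever follows the loop, which mirrors the "before moving on to the next node" behavior described above.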

New node: Generate image

Image generation now works in workflows too. This is ideal for visual automation and creative tasks when you need to produce images regularly. Simply choose the "Image generation" node and your preferred image model to get started!

Retry failed runs

If something does not go according to plan, you can now retry failed runs right away without restarting the entire workflow.

Custom webhook responses

Workflows can now respond to incoming requests in real time. This is perfect for syncing with apps like Slack or notifying other systems immediately.

File uploads via webhooks

For even more integration options, you can now send files directly from external applications into your workflows.

Sidebar pinning

Just as you can pin assistants to the sidebar, you can now do the same for workflows. Keep your favorite workflows just one click away!

Workflow chat context

You can personalize the behavior of your workflow chat by giving the workflow builder your preferred tools, such as Gmail or Outlook, HubSpot or Salesforce, as context. To do so, simply open the chat and click "Set up" to adjust how workflows are created for you.

Personalize workflow forms

When creating a workflow form, you can now personalize the submission experience. Adjust the form's color, description, and name by expanding the "Customisation" section.

These improvements help with simple daily tasks, such as reminders or research, as well as with more complex, multi-step processes. We look forward to hearing what you think!

Improved PDF Processing

We have improved how PDF files are processed so that visual content is handled better.

Until now, when you uploaded a PDF file to Langdock, we extracted the text and sent it to the AI model. The text is used to create so-called "embeddings", which allow the model to run a semantic search within the document. This way, the model can generate high-quality answers based on your uploaded files.

To improve this processing further, we now also take screenshots of pages and make them available to the model. Put simply, this gives the model "eyes", so you can now ask questions like "Describe the details of the chart on page 26" and receive accurate answers. With a new tool, "View page", the model can now actually "see" the document content, which is especially helpful for images, diagrams, logos, and other visual elements.

As always, we look forward to your feedback! 🙌

- Increased file size: We have raised the character limit for files from 4 million to 8 million characters.

- Project limit increased: We have raised the limit on the number of projects you can create from 10 to 30.

- Voice input limit increased: We have raised the limit on the duration of voice input from five to ten minutes.

- Performance improvements: Our engineering team has implemented extensive performance improvements over the past weeks, resulting in faster load times and smoother response generation.

- Model key pooling: Bring-your-own-key customers can now pool model deployments in separate regions. This helps raise rate limits and allows another region to serve as a fallback if the model runs into an error.

- Updated documentation: Over the past year, many new features were added to the product, and our documentation has grown accordingly. We have tidied up the structure, translated the documentation into German, and published documentation for workflows. You can find the documentation at https://docs.langdock.com/.
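
The fallback idea behind model key pooling in the list above can be sketched as "try the deployments in order and use the first one that answers". This is an illustrative pattern only, not Langdock's routing logic: `call_with_fallback`, `DeploymentError`, the region names, and the two stand-in deployment functions are all assumptions made for the example.

```python
class DeploymentError(Exception):
    """Raised by a (hypothetical) deployment call that fails, e.g. on a rate limit."""


def call_with_fallback(deployments, prompt):
    """Try each (region, call) pair in order; return (region, answer) on first success."""
    errors = []
    for region, call in deployments:
        try:
            return region, call(prompt)
        except DeploymentError as exc:
            # Remember the failure and move on to the next region in the pool.
            errors.append(f"{region}: {exc}")
    raise DeploymentError("all deployments failed: " + "; ".join(errors))


def failing_region(prompt):
    """Stand-in for a deployment that is currently rate limited."""
    raise DeploymentError("rate limit reached")


def healthy_region(prompt):
    """Stand-in for a deployment that responds normally."""
    return f"echo: {prompt}"


pool = [("region-a", failing_region), ("region-b", healthy_region)]
region, answer = call_with_fallback(pool, "hello")
print(region, answer)
```

A real pool would likely also spread requests across regions to raise the effective rate limit; this sketch covers only the fallback half of the feature.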

Claude Opus 4.5

Anthropic's Claude Opus 4.5 is now available in Langdock! 🚀

This model stands out for its advanced capabilities in coding, agentic workflows, and handling complex tasks.

Claude Opus 4.5 offers improvements in reasoning and delivers clearer, easier-to-understand answers.

You can read more about the model in Anthropic's announcement.

We have automatically enabled Opus 4.5 in all workspaces in which Claude Sonnet 4.5 was previously used.

As with Claude Sonnet 4.5, the model is available in two versions, with and without reasoning. This lets you decide for yourself whether the model should actively "think along" for your current request.

New GPT-5 & 5.1 Versions

We have updated GPT-5 and GPT-5.1 to the latest model versions from OpenAI. Each is a dedicated "chat" version optimized for conversations.

The new chat versions deliver answers with richer formatting: better structure, clearer lists, and output that is easier to understand and act on. These are the same model versions currently used in ChatGPT.

Both the GPT-5 and the GPT-5.1 chat versions are hosted in the EU. The previous GPT-5 and GPT-5.1 models have been updated and are now live. If you have not tried the latest GPT-5 series yet, give it a try!

GPT-5.1

We are happy to share that GPT-5.1 is now available in Langdock! 🚀

Starting today, we are making the latest version of the GPT-5 series available: GPT-5.1, which also comes in a Thinking mode. This version improves both intelligence and communication style.

The model is smarter, warmer, and better at following instructions than GPT-5. You may notice a more playful tone, paired with more structured answers.

It is an excellent choice when you want a model that follows your instructions closely and delivers polished answers. It is faster at solving simple tasks and at the same time more persistent on complex ones. The model generates clearer answers by using less technical jargon.

You can read more about the model in OpenAI's announcement.

Like the previous GPT-5 model, it is available in two modes: one for quick answers (GPT-5.1) and one that thinks before answering (GPT-5.1 Thinking).

The model is hosted by Microsoft Azure within the EU and is available for all workspaces immediately.

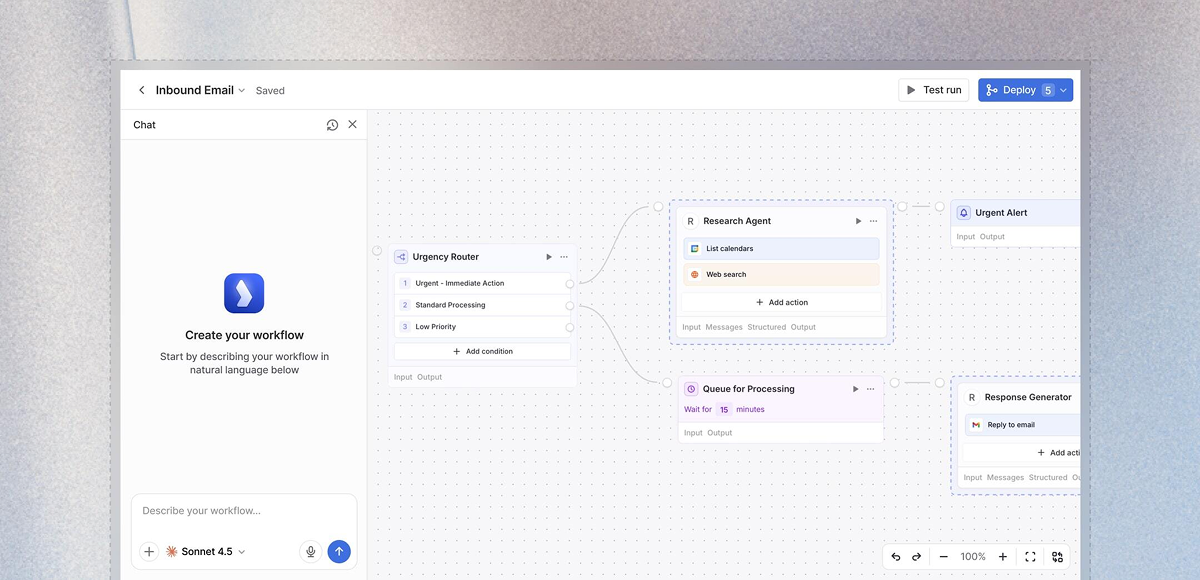

Workflows

We are excited to launch Workflows, a powerful new way to build AI-driven automations in Langdock! 🚀

Workflows bring together everything you already use in Langdock (chat, assistants, and integrations) into full-fledged automations. Think of it as your orchestration layer, where you can chain multiple steps, add logic, and build sophisticated processes that run automatically.

What are workflows?

Workflows let you build complex, multi-step automations without code. They can be triggered by submitted forms, a schedule, or an event in your connected apps, and they carry out processes from start to finish, automatically and reliably.

Key features:

- Multi-step automation: Chain assistants, integrations, and custom logic into sophisticated workflows.

- Flexible triggers: Start workflows manually, on a schedule, via webhook, or through form submissions.

- Conditional logic: Add deterministic if-then conditions, loops, and decision trees to handle complex scenarios.

- Human-in-the-loop: Include manual approval steps when critical decisions need human review.

- AI at every step: Use different AI models throughout your workflow to analyze, decide, and adapt.

- Cost management: Monitor and control workflow spending with built-in cost limits and usage tracking.

Why workflows?

More powerful than chat: While chat is perfect for interactive conversations, workflows automate entire processes in the background. Set them up once, and they run reliably 24/7.

More flexible than assistants: Assistants are great for specific tasks, but workflows let you combine multiple assistants, add custom logic, integrate with external APIs, and build sophisticated decision trees.

More than integrations: Integrations connect your apps, but workflows orchestrate complex processes across those apps, with AI at every step.

Example use cases

- Automatically analyzing and routing customer support tickets based on content and urgency.

- Generating weekly reports by pulling data from multiple sources, analyzing trends, and distributing the results.

- Processing form submissions with AI review, conditional approval flows, and automated follow-up actions.

- Monitoring data sources on a schedule and triggering actions when specific conditions are met.

Getting started

Workflows are now available in Langdock! Here is how to begin:

- Workflows must be enabled by an admin in the workspace settings.

- Navigate to the Workflows area in your sidebar.

- Create your first workflow with our visual builder.

- Choose a trigger, add steps, and connect your logic.

Important notes:

- AI usage in workflows is billed at API prices, and depending on the number of runs you may need a subscription upgrade (pricing details here).

- Set cost limits and enable monitoring to keep spending under control.

- All workflow runs are logged so that workflow creators have transparency and can debug.

We look forward to seeing which processes you build with workflows! As always, we welcome your feedback. 🙌

Improved group permissions

We have improved group permissions to make sharing and permission management for prompts, assistants, and knowledge folders within your team more intuitive.

Previously, all group members, editors, and admins could share prompts, assistants, and knowledge folders with the entire group, which often led to cluttered groups and unclear permission structures.

Key changes

- Group Members can now access and use all prompts, assistants, and knowledge folders shared with the group, but can no longer share new resources themselves.

- The Editor role lets users contribute to the group by sharing prompts, assistants, and knowledge folders.

- Group Admins retain full group management rights.

- New SCIM group settings let workspace admins adjust role management in synced groups.

Why did we change this?

With this update to group role permissions, we reduce clutter in large groups by restricting who can share resources. We also now clearly separate contributing to a group (Editor) from managing a group (Admin).

Detailed permission overview

Members:

- Can view group details and use prompts, assistants, and knowledge folders

- Cannot share with the group

Editors:

- Can do everything Members can

- Can share prompts, assistants, and knowledge folders with the group

- Cannot rename the group or manage members and roles

Admins:

- Can do everything Editors can

- Can change user roles and add or remove users

- Can rename the group and change the group description

- Can delete the group
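The role hierarchy above can be pictured as a small lookup table. The following Python sketch is purely illustrative and is not Langdock's implementation or API: the names `GroupRole`, `REQUIRED_ROLE`, and `can`, as well as the action strings, are assumptions made up for this example.

```python
from enum import IntEnum


class GroupRole(IntEnum):
    """Hypothetical role levels; a higher value includes all lower ones."""
    MEMBER = 1
    EDITOR = 2
    ADMIN = 3


# Minimum role needed per action, following the overview above.
# The action names are illustrative, not real Langdock identifiers.
REQUIRED_ROLE = {
    "view_group_details": GroupRole.MEMBER,
    "use_shared_resources": GroupRole.MEMBER,
    "share_with_group": GroupRole.EDITOR,
    "manage_members_and_roles": GroupRole.ADMIN,
    "rename_group": GroupRole.ADMIN,
    "edit_group_description": GroupRole.ADMIN,
    "delete_group": GroupRole.ADMIN,
}


def can(role: GroupRole, action: str) -> bool:
    """Return True if the given role is allowed to perform the action."""
    return role >= REQUIRED_ROLE[action]
```

For example, `can(GroupRole.MEMBER, "share_with_group")` evaluates to `False`, while the same call with `GroupRole.EDITOR` evaluates to `True`.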

You can find more information in our documentation.

How does this affect me?

Sharing flow: You still see all your groups when sharing prompts, assistants, or knowledge folders. If you are an Editor or Admin, you can share resources with the group. If you are a Member, the group is grayed out and an info message explains which permissions you are missing to share.

Group members were migrated to Editors: Users who were a Member of a group before the update now have the Editor role. Because the new Editor role has the same sharing permissions as the old Member role, nothing changes for previously shared prompts or assistants.

Admin tools and SCIM defaults: We have added admin settings and tools that make syncing SCIM groups with these new permissions much easier:

- Set the default role for newly synced SCIM groups in the security settings

- Set the default role for new users added to a specific SCIM group

- Use our role conversion feature to change the roles of all Members or Editors in groups

You can find more details in our documentation.

We look forward to your feedback on how these new permission levels improve collaboration in your workspace! 🙌

- Automatic model fallback: If your selected model is unavailable due to provider issues, we automatically switch to a backup model so you always get a response.

- Improved login flow: Just enter your email and we show you all relevant login options, or redirect you straight to your identity provider in SAML workspaces.

- Assistant names on hover: When you use assistants via @ in a chat, you now see the assistant's name when you hover over the tag.

- Slack bot image support: Our Slack bot can now read and understand images shared with it.

- Microsoft Teams 1:1 messages: Send direct 1:1 chat messages in Teams to your colleagues, including those you have not started a chat with yet.

- Improved Google Drive file search: When you upload files from Google Drive directly to the chat or to assistant knowledge via the "Select file" button, you now get more relevant results.

- Outlook email replies: You can now reply to emails directly through the Outlook integration.

- Additional file types: You can now upload and use Parquet, .msg, .one, .vsdx, and .svg files in the chat.

- Copy-to-clipboard button for prompts: Quickly copy prompts to your clipboard with the new copy button.

- MCP improvements: Connect to MCP servers with extended OAuth logic and custom headers.

- API key management: API keys now have scopes for better security and access management.
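The automatic model fallback from the list above follows a common pattern: try the selected model first, then fall back to a backup when the provider is unavailable. The Python sketch below only illustrates that general pattern under stated assumptions; it does not describe how Langdock implements it. `ProviderUnavailable`, `complete_with_fallback`, and the `call_model` callback are hypothetical names invented for this example.

```python
from typing import Callable, Sequence, Tuple


class ProviderUnavailable(Exception):
    """Hypothetical error for a model whose provider cannot respond."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order and return (model_used, answer).

    `models` lists the selected model first, followed by backups.
    `call_model(model, prompt)` is a caller-supplied function that
    raises ProviderUnavailable when the model cannot be reached.
    """
    last_error = None
    for model in models:
        try:
            return model, call_model(model, prompt)
        except ProviderUnavailable as exc:
            # Remember the failure and move on to the next backup.
            last_error = exc
    raise RuntimeError("no model in the list was available") from last_error
```

Returning the name of the model that actually answered makes it possible to tell the user when a backup was used.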

Nano Banana in the EU

We are excited that Gemini 2.5 Flash Image (Nano Banana) is now available in the EU as well! 🚀

Google's image generation model has been requested very often since its launch. It is currently one of the best image generation models and supports image editing.

Unfortunately, it was not available in the EU until now. Google now offers the model as an EU-hosted option, so we have added it and set it as the new default image model.

The key highlights are:

- Image editing: Upload images and adjust them with prompts: add or remove elements, change colors, or transform the style

- High image quality: Precise rendering of details and reliable realization of your creative ideas

You can change your personal default image model at any time in the account settings under Preferences.

The model is live now, and we look forward to your feedback.